Lessons of conventional imaging let scientists see around corners

Along with flying and invisibility, high on the list of every child’s aspirational superpowers is the ability to see through or around walls or other visual obstacles.

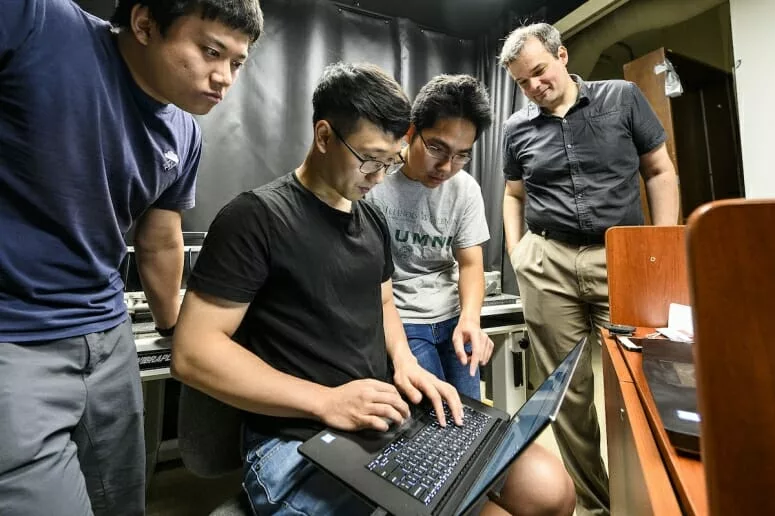

That capability is now a big step closer to reality as scientists from the University of Wisconsin School of Medicine and Public Health, UW–Madison and the Universidad de Zaragoza in Spain, drawing on the lessons of classical optics, have shown that it is possible to image complex hidden scenes using a projected “virtual camera” to see around barriers.

The technology is described in a report in the journal Nature. Once perfected, it could be used in a wide range of applications, from defense and disaster relief to manufacturing and medical imaging. The work has been funded largely by the military through the U.S. Department of Defense’s Defense Advanced Research Projects Agency (DARPA) and by NASA, which envisions the technology as a potential way to peer inside hidden caves on the moon and Mars.

Technical challenges have stymied previous efforts

Technologies to achieve what scientists call “non-line-of-sight imaging” have been in development for years, but technical challenges have limited them to fuzzy pictures of simple scenes. Challenges that could be overcome by the new approach include imaging far more complex hidden scenes, seeing around multiple corners and taking video.

“This non-line-of-sight imaging has been around for a while,” says Andreas Velten, PhD, a professor of biostatistics and medical informatics in the UW School of Medicine and Public Health and the senior author of the new Nature study. “There have been a lot of different approaches to it.”

The basic idea of non-line-of-sight imaging, Velten says, revolves around the use of indirect, reflected light, a light echo of sorts, to capture images of a hidden scene. Photons from thousands of pulses of laser light are reflected off a wall or another surface into an obscured scene, and the reflected, diffused light bounces back to sensors connected to a camera. The recaptured photons are then used to digitally reconstruct the hidden scene in three dimensions.

“We send light pulses to a surface and see the light coming back, and from that we can see what’s in the hidden scene,” Velten explains.
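The light-echo idea can be made concrete with a little arithmetic. The sketch below is only an illustration, not the team's reconstruction method: it assumes a simple path of laser, wall, hidden point, wall and detector, and computes when the echo would arrive. The function name `echo_time` and all coordinates are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, metres per second


def echo_time(laser, wall_in, hidden, wall_out, detector):
    """Return the travel time in seconds of one light echo.

    Assumed path (an illustration, not taken from the study):
    laser -> spot on the visible wall -> hidden point -> spot on the
    wall seen by the detector -> detector. Points are (x, y, z) in metres.
    """
    path = [laser, wall_in, hidden, wall_out, detector]
    # Sum the straight-line length of each leg of the path.
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    return length / C


# Hypothetical geometry: legs of 5 m, 12 m, 12 m and 5 m (34 m in total).
t = echo_time((0, 0, 0), (3, 4, 0), (3, 4, 12), (3, 4, 0), (0, 0, 0))
print(f"echo arrives after {t * 1e9:.1f} ns")
```

An object deeper in the hidden scene returns its echo later, which is why the arrival times of the photons carry information about the hidden geometry.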

Recent work by other research groups has focused on improving the quality of scene reconstruction under controlled conditions using small scenes with single objects. The work presented in the new Nature report goes beyond simple scenes and addresses the primary limitations of existing non-line-of-sight imaging technology, including varying material qualities of the walls and surfaces of the hidden objects, large variations in brightness of different hidden objects, complex inter-reflection of light between objects in a hidden scene, and the massive amounts of noisy data used to reconstruct larger scenes.

Together, those challenges have stymied practical applications of emerging non-line-of-sight imaging systems.

Addressing the problem from a different angle

Velten and his colleagues, including Diego Gutierrez of the Universidad de Zaragoza, turned the problem around, looking at it through a more conventional prism by applying the same math used to interpret images taken with conventional line-of-sight imaging systems. Rather than relying on a single reconstruction algorithm, the new method describes a new class of imaging algorithms that share unique advantages.

Conventional systems, notes Gutierrez, interpret diffracted light as waves, which can be shaped into images by applying well-known mathematical transformations to the light waves propagating through the imaging system.

The challenge of imaging a hidden scene, says Velten, is resolved by reformulating the non-line-of-sight imaging problem as a wave diffraction problem and then using well-known mathematical transforms from other imaging systems to interpret the waves and reconstruct an image of the hidden scene. By doing this, the new method turns any diffuse wall into a virtual camera.
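To give a feel for what treating a wall as a camera aperture can mean, here is a toy sketch. It is not the algorithm from the Nature paper: it assumes a two-dimensional scene, a single-frequency wave, one hidden point source and a hypothetical wavelength. A wave recorded at sample points along the wall is propagated back numerically to candidate locations, and the result is brightest at the true source position. All names and values are illustrative assumptions.

```python
import cmath
import math

WAVELENGTH = 0.04  # metres; hypothetical value chosen for the toy example
K = 2 * math.pi / WAVELENGTH  # wavenumber


def wall_samples(n=201, half_width=0.5):
    """Evenly spaced sample points on the wall (the line z = 0)."""
    return [(-half_width + 2 * half_width * i / (n - 1), 0.0) for i in range(n)]


def field_on_wall(source, wall):
    """Complex wave amplitude at each wall sample from one point source."""
    out = []
    for p in wall:
        r = math.dist(source, p)
        out.append(cmath.exp(1j * K * r) / r)  # spherical-wave phase and falloff
    return out


def focus(field, wall, v):
    """Back-propagate the wall field to point v and return the intensity."""
    total = 0j
    for u, p in zip(field, wall):
        # Undo the phase each sample would have picked up travelling from v.
        total += u * cmath.exp(-1j * K * math.dist(p, v))
    return abs(total) ** 2


wall = wall_samples()
field = field_on_wall((0.0, 1.0), wall)  # hidden source 1 m behind the corner
for v in [(0.0, 1.0), (0.3, 1.0), (0.0, 1.3)]:
    print(v, round(focus(field, wall, v), 1))
```

At the true source position every contribution adds in phase, so the intensity peaks there; elsewhere the contributions partly cancel. This is the same focusing principle a lens performs optically, done here in software.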

“What we did was express the problem using waves,” says Velten, who also holds faculty appointments in UW–Madison’s Department of Electrical and Computer Engineering and the Department of Biostatistics and Medical Informatics, and is affiliated with the Morgridge Institute for Research and the UW–Madison Laboratory for Optical and Computational Instrumentation. “The systems have the same underlying math, but we found that our reconstruction is surprisingly robust, even using really bad data. You can do it with fewer photons.”

Using the new approach, Velten’s team showed that hidden scenes can be imaged despite the challenges of scene complexity, differences in reflector materials, scattered ambient light and varying depths of field for the objects that make up a scene.

Real-world applications within reach

The ability to essentially project a camera from one surface to another suggests that the technology can be developed to a point where it is possible to see around multiple corners: “This should allow us to image around an arbitrary number of corners,” says Velten. “To do so, light has to undergo multiple reflections and the problem is how do you separate the light coming from different surfaces? This ‘virtual camera’ can do that. That’s the reason for the complex scene: there are multiple bounces going on and the complexity of the scene we image is greater than what’s been done before.”

According to Velten, the technique can be applied to create virtual projected versions of any imaging system, even video cameras that capture the propagation of light through the hidden scene. Velten’s team, in fact, used the technique to create a video of light transport in the hidden scene, enabling visualization of light bouncing up to four or five times, which, according to the Wisconsin scientist, can be the basis for cameras to see around more than one corner.

The technology could be further and more dramatically improved if arrays of sensors can be devised to capture the light reflected from a hidden scene. The experiments described in the new Nature paper depended on just a single detector.

In medicine, the technology holds promise for things like robotic surgery. Currently, the surgeon’s field of view is restricted when doing sensitive procedures on the eye, for example, and the technique developed by Velten’s team could provide a more complete picture of what’s going on around a procedure.

In addition to helping resolve many of the technical challenges of non-line-of-sight imaging, the technology, Velten notes, can be made to be both inexpensive and compact, meaning real-world applications are just a matter of time.

By Terry Devitt, University Communications

This work was funded by DARPA through the DARPA REVEAL project (HR0011-16-C-0025), the NASA NIAC program, the AFOSR Young Investigator Program (FA9550-15-1-0208), the European Research Council (ERC) under the EU’s Horizon 2020 research and innovation program (project CHAMELEON, grant No 682080), the Spanish Ministerio de Economía y Competitividad (project TIN2016-78753-P), and the BBVA Foundation (Leonardo Grant for Researchers and Cultural Creators). Results of this work have been disclosed to the Wisconsin Alumni Research Foundation for purposes of intellectual property protection.
